Optimize the Robots.txt File

SEO for Airlines

The robots.txt file tells crawlers which pages they can or can’t access. It is part of the Robots Exclusion Protocol (REP), a group of web standards that regulate how bots crawl, access, index, and serve web content.

An optimized robots.txt file can bring three key benefits for an airline’s site:

- It helps Google maximize the crawl budget of the site.

- It can prevent server overload.

- It can keep non-public sections of a site private.

However, the robots.txt comes with certain limitations:

- It may not be supported by all search engines.

- It may be interpreted differently by certain crawlers.

- Blocked pages can still be indexed if linked to from other sites.

Because a misconfigured robots.txt file can keep crawlers away from pages that matter, you must ensure it complies with the implementation requirements.

Airlines that adopt airTRFX don’t need to worry about it because the robots.txt file integrated into the platform follows the best SEO practices.

In this section:

1. Follow the format, location, and syntax rules

2. Make the robots.txt file return a 200 response code

3. Don’t use the robots.txt to keep pages out of Google

4. Don’t block pages and resources that you want search engines to crawl

5. Maximize crawl budget

1. Follow the format, location, and syntax rules

To start, the file must be named “robots.txt”, and a site can have only one. The file must be plain text encoded in UTF-8 (which includes ASCII). It must be placed at the root of the domain or subdomain it applies to; otherwise, crawlers will ignore it.

Also, Google enforces a size limit of 500 kibibytes (KiB) and ignores content after that limit.
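The location and size rules above can be checked with a few lines of Python. This is a minimal sketch, not an official tool: the function names and the example.com URLs are assumptions made for illustration, and only the 500 KiB figure comes from the text above.

```python
from urllib.parse import urlsplit, urlunsplit

# Size limit mentioned above: 500 KiB (1 KiB = 1024 bytes).
MAX_ROBOTS_BYTES = 500 * 1024


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL at the root of page_url's host."""
    parts = urlsplit(page_url)
    # Keep scheme and host, drop the path, query string, and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def exceeds_size_limit(robots_body: bytes) -> bool:
    """True if content past the limit would be ignored."""
    return len(robots_body) > MAX_ROBOTS_BYTES
```

Note that each subdomain resolves to its own file: a page on a hypothetical booking.example.com host is governed by the robots.txt at the root of that subdomain, not by the one on www.example.com.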

There may be one or more groups of directives, one directive per line. Each group of directives includes:

- User-agent: the name of the crawler. Many user-agent names are listed in Web Robots Database.

- Disallow: a directory or page, relative to the root domain, that should not be crawled by the user-agent. It could have more than one entry.

- Allow: a directory or page, relative to the root domain, that should be crawled by the user-agent. It could have more than one entry.

Keep in mind that groups are processed from top to bottom, and a user-agent can match only one group: the first, most specific group that matches it. Additionally, paths in rules are case sensitive, so a mistyped directive or path may not have the effect you intended.

You can also make comments preceded by a # and everything after it will be ignored.

The robots.txt file also supports a limited form of “wildcards” for pattern matching:

- * designates 0 or more instances of any valid character.

- $ designates the end of the URL.
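To see how these two wildcards behave, here is a small Python sketch that translates a robots.txt path pattern into a regular expression. It is an illustration of the matching idea under simple assumptions (the function names and sample paths are hypothetical), not a reproduction of any crawler's actual matcher.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into an anchored regex."""
    anchored_end = pattern.endswith("$")
    if anchored_end:
        pattern = pattern[:-1]
    # Escape each literal piece, then join the pieces with ".*" for "*".
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile("^" + body + (r"\Z" if anchored_end else ""))


def path_matches(pattern: str, path: str) -> bool:
    """True if path is covered by pattern (a prefix match unless "$" ends it)."""
    return pattern_to_regex(pattern).match(path) is not None
```

For instance, the pattern /*.pdf$ covers /docs/fares.pdf but not /docs/fares.pdf?lang=en, while /search covers every path that starts with /search. Matching is case sensitive, so /Print/ does not cover /print/page.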

Avoid the “crawl-delay” directive: Google ignores it, and other crawlers might too. Google has its own algorithms to determine the optimal crawl speed for a site.

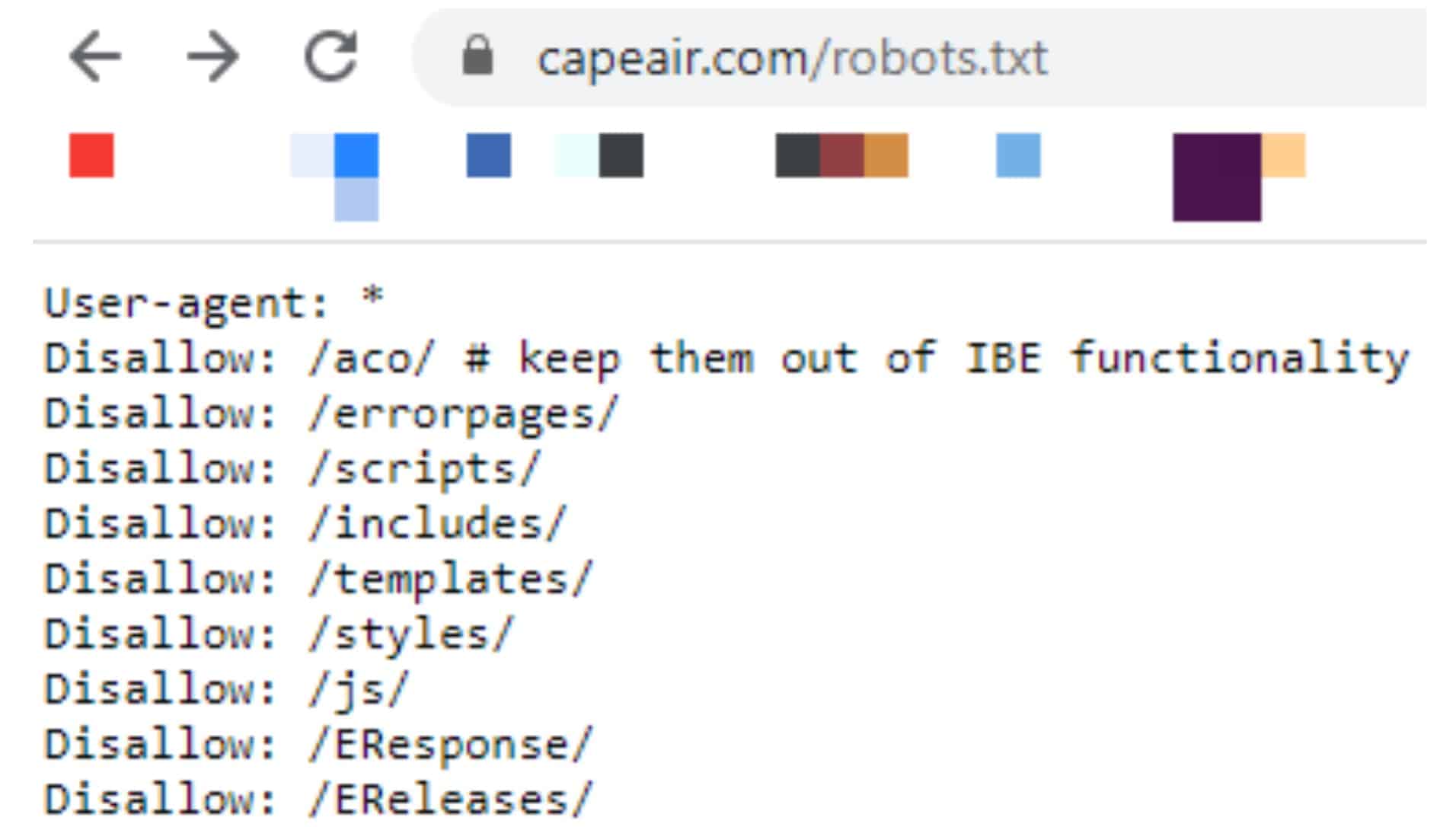

For example, here is how the robots.txt for Cape Air looks like:

Let’s explain the structure of the robots.txt file, based on the example above:

- User-agent: * indicates that the directives are meant for all crawlers.

- Disallow: the directories (e.g. /aco/) indicate the forbidden paths for the crawlers.

- #: a comment meant for humans, ignored by crawlers.
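The same structure can be made concrete with a short Python sketch that splits a robots.txt file into its groups. This is a simplified reading of the format for illustration only: the function name and the returned dictionary layout are assumptions, and the parser handles just the User-agent, Allow, and Disallow fields.

```python
from typing import Dict, List


def parse_groups(robots_txt: str) -> List[Dict[str, list]]:
    """Split robots.txt text into groups of user-agents and their rules."""
    groups: List[Dict[str, list]] = []
    current = None
    for raw_line in robots_txt.splitlines():
        # Everything after "#" is a comment meant for humans.
        line = raw_line.split("#", 1)[0].strip()
        if ":" not in line:
            continue  # blank line or not a directive
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            # A user-agent line that follows rules starts a new group.
            if current is None or current["rules"]:
                current = {"user_agents": [], "rules": []}
                groups.append(current)
            current["user_agents"].append(value)
        elif field in ("allow", "disallow") and current is not None:
            current["rules"].append((field, value))
    return groups
```

Feeding it a file with a `User-agent: *` line followed by `Disallow: /aco/` yields one group whose rules list contains the pair ("disallow", "/aco/").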

For a full rundown of the syntax rules with examples, check out Google’s robots.txt specifications.

2. Make the robots.txt file return a 200 response code

For the directives in the robots.txt to take effect, the file must return an HTTP 200 (success) status code. Google treats the file differently for other response codes:

- 3XX: Google follows at least five redirect hops. If no robots.txt file is found by then, Google treats the result like a 404, which means that there are no crawling restrictions.

- 4XX: Google assumes that there is no valid robots.txt file, which means that there are no crawling restrictions.

- 5XX: Google treats server errors as temporary and stops crawling the site entirely (a full disallow). If the robots.txt file is still unreachable after 30 days, Google uses the last cached version of the file. If no cached version is available, Google assumes that there are no crawl restrictions.
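The status code handling described above can be summarized as a small decision function. The Python sketch below only restates the list in code form; the function name, parameters, and returned labels are made up for illustration and do not correspond to any Google API.

```python
def crawl_policy(status: int, days_unreachable: int = 0,
                 has_cached_copy: bool = False) -> str:
    """Summarize how a robots.txt HTTP status affects crawling, per the list above."""
    if 200 <= status < 300:
        return "apply rules from the fetched file"
    if 300 <= status < 400:
        return "follow redirects; treat as 404 if no file is found"
    if 400 <= status < 500:
        return "no crawl restrictions"
    if 500 <= status < 600:
        if days_unreachable <= 30:
            return "full disallow"
        # After 30 days, fall back to the cached copy if one exists.
        return "apply last cached rules" if has_cached_copy else "no crawl restrictions"
    raise ValueError(f"unexpected HTTP status: {status}")
```

The practical takeaway is the 5XX branch: a server that keeps failing on /robots.txt can halt crawling of the whole site, so it is worth monitoring that URL like any other critical page.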

3. Don’t use the robots.txt to keep pages out of Google

The purpose of the robots.txt is to manage crawling, not indexing.

For example, a blocked page can still appear in search results if other pages link to it.

In another example, if a page or a group of pages is already indexed and you want to remove it from Google, you shouldn’t rely on the robots.txt. This is because the robots.txt does not support a noindex directive.

Instead, use a noindex directive through a robots meta tag or an X-Robots-Tag HTTP header.

Even if you use a noindex directive on a page or group of pages that are already indexed, you should not block the page(s) in the robots.txt. If you do so, Google won’t be able to process the noindex directive!
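As an illustration of the HTTP header option, here is a minimal Python WSGI sketch that adds an X-Robots-Tag: noindex header to selected paths. The path prefixes and the response body are hypothetical; a real site would set the header in its own web server or framework, and the same paths must stay crawlable in the robots.txt so the header can be seen.

```python
from typing import Callable, Iterable

# Hypothetical path prefixes that should stay crawlable but out of the index.
NOINDEX_PREFIXES = ("/booking/confirmation", "/print/")


def app(environ: dict, start_response: Callable) -> Iterable[bytes]:
    """Minimal WSGI app that sends X-Robots-Tag: noindex for selected paths."""
    path = environ.get("PATH_INFO", "/")
    headers = [("Content-Type", "text/html; charset=utf-8")]
    if path.startswith(NOINDEX_PREFIXES):
        headers.append(("X-Robots-Tag", "noindex"))
    start_response("200 OK", headers)
    return [b"<!doctype html><title>Example page</title>"]
```

To try it locally, the app can be served with make_server from the standard wsgiref.simple_server module. The robots meta tag alternative achieves the same result from inside the page's HTML head.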

4. Don’t block pages and resources that you want search engines to crawl

This seems pretty obvious, but it’s worth stressing. Make sure that the robots.txt is not blocking, whether by design or by accident, resources that search engines should crawl.

This is especially important for JavaScript and CSS files that are essential for the content and mobile-friendliness of a page.

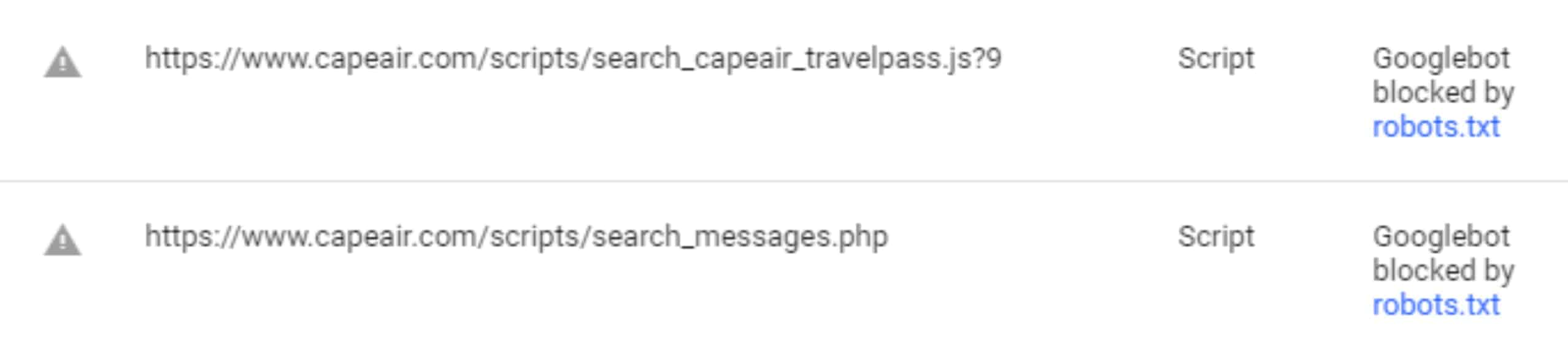

For example, based on the Cape Air example above, it seems that they are blocking a directory called /js/, which is likely where some JavaScript scripts live. You can use the Mobile-Friendly Test tool to verify it. Just submit an URL and click on the Page Loading Info tab:

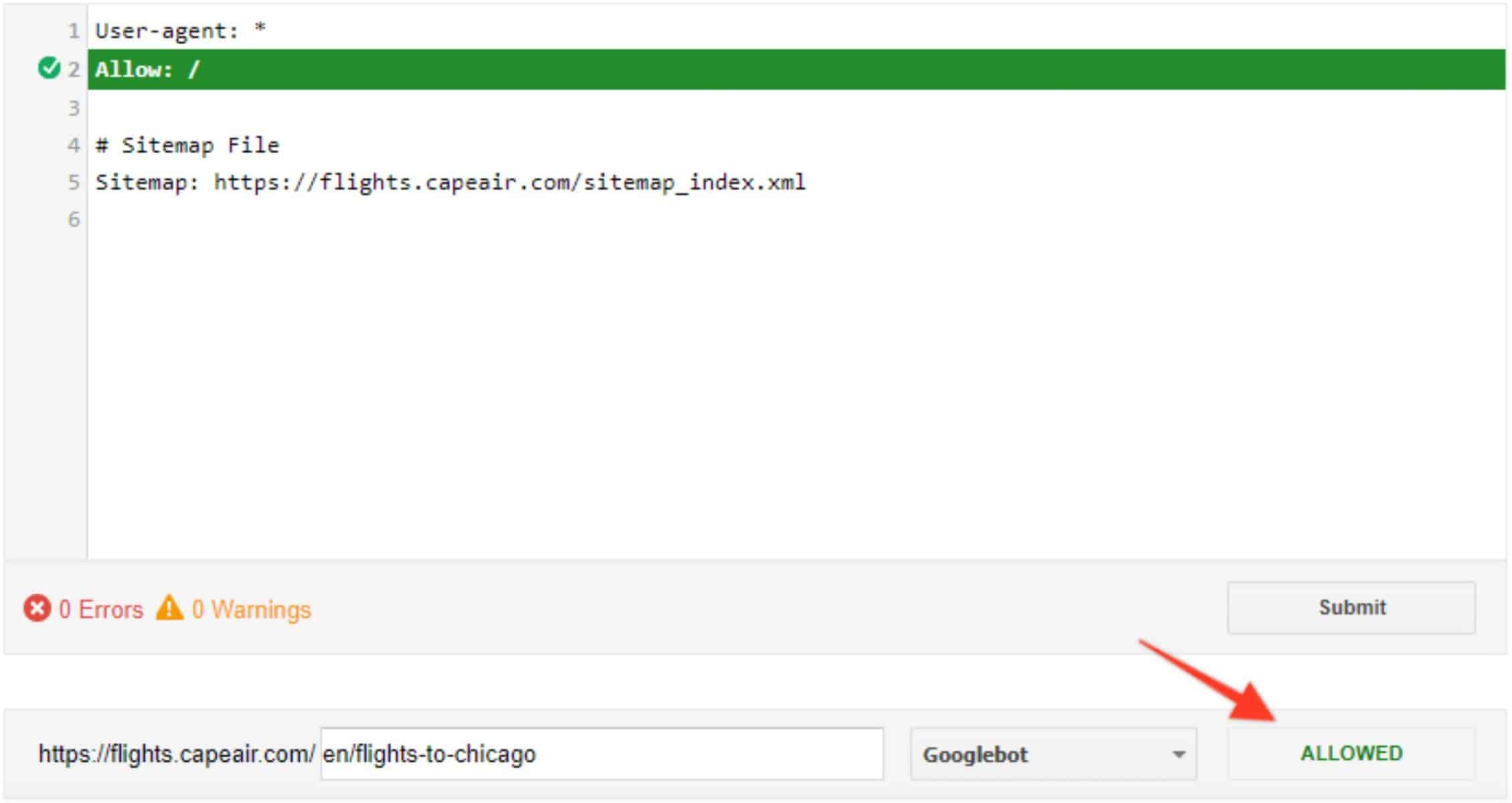

If you want to test whether your robots.txt is blocking important pages, use the Google Search Console Robots.txt tool. In this case, let’s check the Cape Air’s robots.txt file:
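A quick local check is also possible with Python's standard urllib.robotparser module. The sketch below is a rough approximation, not a replacement for Google's tools: the standard-library parser does not implement the * and $ wildcards and may resolve conflicting rules differently from Googlebot. The function name and the URLs in the usage example are hypothetical.

```python
from typing import List
from urllib.robotparser import RobotFileParser


def blocked_resources(robots_txt: str, urls: List[str],
                      user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that robots_txt forbids user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Usage with a hypothetical rule set that blocks a /js/ directory.
rules = "User-agent: *\nDisallow: /js/\n"
resources = [
    "https://www.example.com/js/app.js",
    "https://www.example.com/css/site.css",
]
print(blocked_resources(rules, resources))
```

With these sample rules, only the script under /js/ is reported as blocked, which is the kind of result that should prompt a closer look at whether the page still renders correctly without it.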

5. Maximize crawl budget

The crawl budget is the number of URLs that Googlebot can and wants to crawl on a site. It depends on two factors:

- Crawl rate limit: the maximum fetching rate for a site that does not degrade the user experience or overload the server.

- Crawl demand: the number of URLs that are important enough for Googlebot to crawl. This number depends on how popular the URLs are on the Internet and how stale they are in the Google index.

Crawl budget is not a problem for small or even medium-sized websites with a few thousand URLs.

However, a typical airline’s website is quite large. It has several thousand URLs and, in some cases, more than a million. Here is an example of what the Coverage report looks like for one of our customers:

There are a number of factors that can affect the crawl budget:

- Faceted navigation and session identifiers

- On-site duplicate content

- Soft error pages

- Hacked pages

- Infinite spaces and proxies

- Low quality and spam content

- And more!

Wasting server resources on these URLs will drain crawl budget from pages that actually have value. You can prevent Googlebot from accessing those URLs by blocking them in the robots.txt file.

In our experience, these are the most common types of pages that should be blocked in the robots.txt of an airline’s website:

- Deep links into the Internet Booking Engine (IBE)

- URLs with session identifiers

- URLs with parameters that don’t meaningfully change the content (e.g., internal tracking parameters)

- Non-public pages (e.g., staging sites, account pages, admin pages)

- Search result pages

- Conversion pages

- Print pages

- URLs of scripts that are not essential for a page’s content or functionality
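To show how such a list could translate into directives, here is a Python sketch that renders one robots.txt group from a list of path patterns. Every pattern below is a hypothetical placeholder: the real paths depend entirely on how a given airline's site and booking engine are built, so they should be replaced with paths confirmed in the site's own URL structure.

```python
from typing import Iterable

# Hypothetical patterns, one per page type from the list above.
BLOCKED_PATTERNS = [
    "/booking/",       # deep links into the booking engine
    "/*sessionid=",    # URLs with session identifiers
    "/*icid=",         # internal tracking parameter
    "/account/",       # non-public pages
    "/search/",        # internal search result pages
    "/confirmation/",  # conversion pages
    "/print/",         # print pages
]


def build_robots_txt(patterns: Iterable[str], user_agent: str = "*") -> str:
    """Render one group that disallows every pattern for user_agent."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {pattern}" for pattern in patterns]
    return "\n".join(lines) + "\n"
```

Keeping the patterns in a reviewed list like this makes it easier to spot a rule that is too broad before it is published, which matters because one overly general Disallow line can block pages that should be crawled.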

Useful Tools and Resources

- Google on the Robots Exclusion Protocol specifications

- Google’s introduction to the robots.txt file

- Google’s guide to creating a robots.txt file

- Google’s robots.txt specifications

- Google’s specifications for the noindex directives

- Web Robots Database

- List of Google crawlers

- Google’s Mobile-Friendly Test tool

- Google’s Robots.txt Test tool

- Google’s crawl budget guide

- Google’s FAQ pages on the robots.txt file

- ContentKing’s guide to the robots.txt file